Rethinking Bias in AI

The human cognitive bias known as pareidolia has shaped our culture. The Man in the Moon, for example, is a significant piece of folklore. We consider this human flaw full of meaning, yet we fail to extend the same grace to the artificial intelligence that we are building.

I had the pleasure of giving this talk at TEDx Calgary's Navigators event in November 2018. It was an incredible experience, and the volunteers created a very memorable event that I was happy to be a part of.

Here is a lightly edited transcript of the talk.

The Discovery of the Pebble of Many Faces

Three million years ago, one of our hominid ancestors was living in the northern part of what is now South Africa. That was more than 150,000 generations ago in our family tree: our great-grandparents 150,000 times over.

This individual was the cause of a mystery that remained unsolved until 1974.

Our ancestor came across this brownish-red pebble and picked it up. It was slightly larger and slightly heavier than a billiard ball, and it was probably easy to spot: the deep red color of the jasperite was unusual, and it would have stood out from the other pebbles and grasses. After picking it up, our ancestor carried the stone 32 kilometers to their home. Walking directly, that would have been at least a six-and-a-half-hour hike. Walking 32 kilometers wasn’t uncommon for these individuals; they foraged for food, so walking to find it was a daily activity. But it was uncommon for them to carry a relatively heavy stone for that whole journey. Human scientists excavating the caves millions of years later reasoned that the stone must have been important to whoever carried it that far. Why?

The key to solving the mystery was understanding a brain that was similar to, yet still different from, ours. This is what fascinated me about the story, because throughout my career I’ve been building artificial brains that help computers understand the human world. I help artificial intelligence understand our attitudes, behaviours, language, and perception. And it’s very hard to understand a brain that is different from our own.

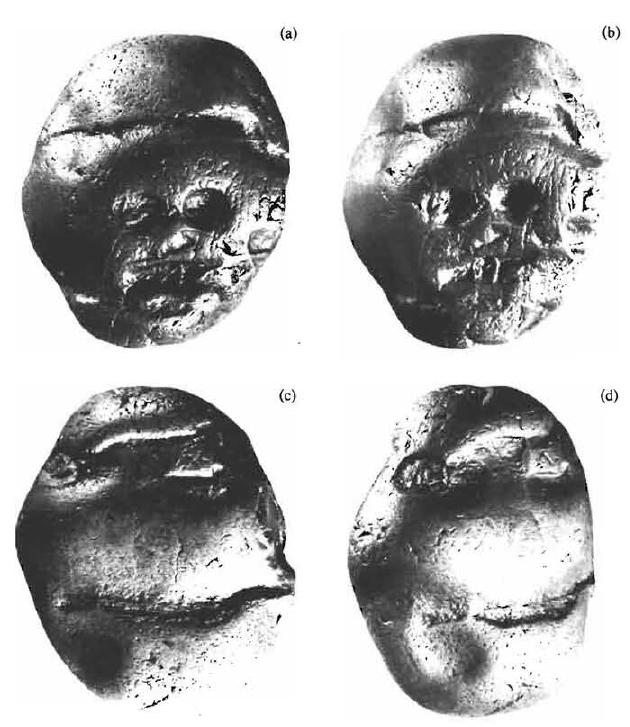

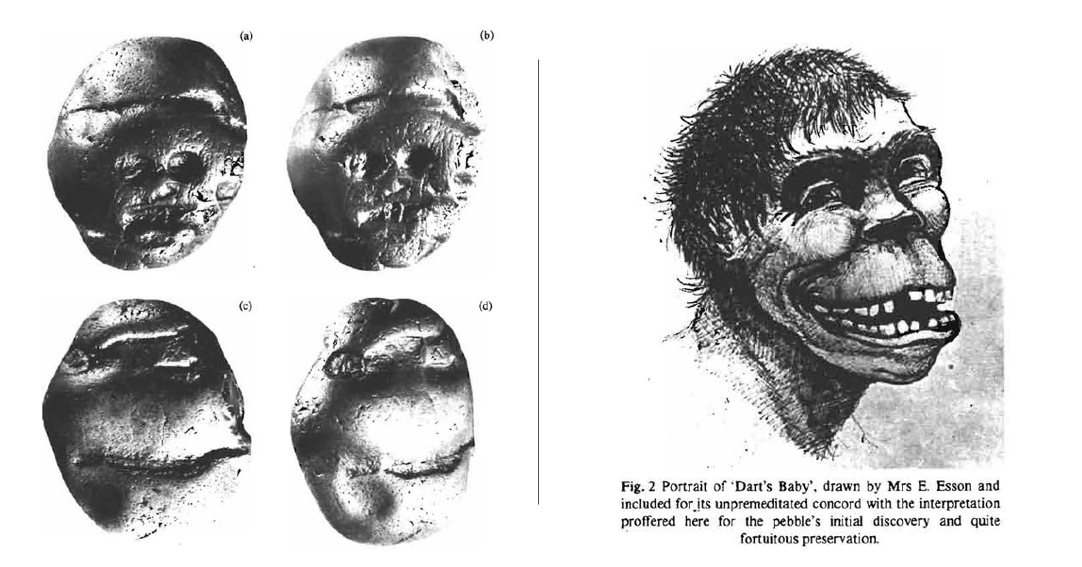

The “large-brained” face on one side was obvious to the human paleoanthropologists; it’s hard to miss. But it wasn’t a human that picked the stone up; it was an australopithecine. That individual would more readily have recognized the face on the other side of the stone, which looked much more like them.

Why we see faces: Pareidolia

This is the earliest known example of pareidolia. Pareidolia is the phenomenon of perceiving a familiar pattern where none may really exist. Our visual and auditory systems are especially prone to it, and we are not able to control it. One of the most famous pareidolic images is the "Face on Mars," taken by NASA's Viking 1 orbiter in 1976. The image led many who saw it to believe in a long-lost civilization on Mars. Unfortunately, it was just an illusion caused by pareidolia.

There are also hundreds of mimetoliths, or rock faces, around the world. This is the Grey Man of Merrick, in southern Scotland. Hikers travel long distances to see formations like these.

Even the moon has been the subject of many stories. I grew up seeing the face of a person in the moon. “The Man in the Moon” was a story that my friends and family and I shared. But there are many other stories. Some see a rabbit pounding herbs or a woman carrying a bundle of sticks. These images are woven into folklore and customs.

Pareidolia goes deeper than just seeing the shapes of faces or animals. Just as with the Pebble of Many Faces, our reactions can be strong: we perceive emotion. In a social species like ours, it has always been important to be able to quickly assess the mental state of another individual. Were they hostile? Happy? Afraid?

The Pebble of Many Faces is so important because you can see different emotions or personalities in each orientation of the stone. Recognizing faces in this pebble’s random markings was a significant point in the development of cognitive abilities in our ancestral tree.

So I wondered: If our brains are prone to see these apparitions, and we are creating artificial intelligence to see as we see, what is AI prone to see?

Machine Vision

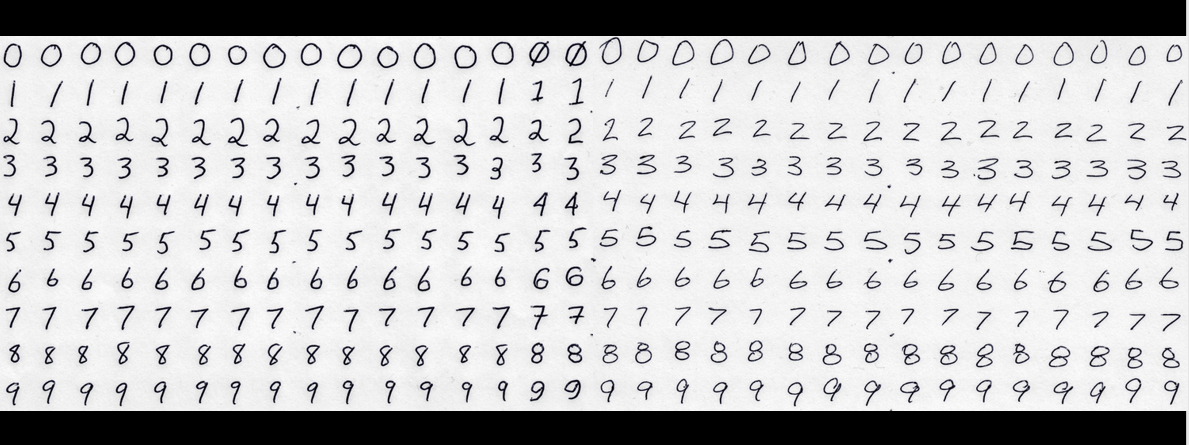

Early in my career, machine vision was what drew me into artificial intelligence. I wanted to know why it was sometimes so hard for machines to do something that seemed so simple. Why was recognizing letters and numbers so hard for computers, when it is one of the first things we learn to do as children?

And even now, 15 years later, this problem is not solved. We’ve all seen it when a CAPTCHA asks us to prove we’re not a robot by typing in the numbers and letters we see. Machine vision lets computers recognize not only letters and numbers but all the different objects in our world. It’s what will allow self-driving cars to navigate busy streets safely, or let us search through our vacation photos.

How does AI see and understand what it’s looking at? Computer vision systems are set up to mimic our vision system.

We can think of our vision system as composed of:

1. Our physical eyes, which determine how information comes into our visual system.

2. Our brain’s structure, or how the brain is connected.

3. Our brain’s learning mechanism, how connections between neurons in the brain strengthen throughout our lives.

4. The history of what we have seen, which allows us to understand new images.

In parallel, AI has:

1. Inputs into the system, which might be pixels of an image or groups of pixels.

2. A structure that mimics the neural structure of our brain.

3. A learning mechanism to change this structure as it learns.

4. Data that is used to train it.
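To make these four parts concrete, here is a minimal sketch in Python. It is not the network used in this talk: it is a single artificial neuron (a perceptron, chosen as a deliberately simple stand-in) trained on four hypothetical 3x3 "images" of vertical and horizontal bars. The names `TRAINING_DATA`, `predict`, and `train`, and all of the data, are illustrative assumptions.

```python
# A minimal sketch, not the network used in this talk: a single artificial
# neuron (a perceptron) with the four parts of a vision system labeled.

# 4. Data: hypothetical 3x3 "images" (1 = dark pixel), each with a human label.
#    Label 1 means "vertical bar", label 0 means "horizontal bar".
TRAINING_DATA = [
    ([0, 1, 0,
      0, 1, 0,
      0, 1, 0], 1),
    ([1, 0, 0,
      1, 0, 0,
      1, 0, 0], 1),
    ([0, 0, 0,
      1, 1, 1,
      0, 0, 0], 0),
    ([1, 1, 1,
      0, 0, 0,
      0, 0, 0], 0),
]


def predict(weights, bias, pixels):
    # 1. Inputs: the raw pixel values.
    # 2. Structure: one neuron with one weight per pixel, plus a bias term.
    activation = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1 if activation > 0 else 0


def train(data, epochs=10, learning_rate=1.0):
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for pixels, label in data:
            # 3. Learning mechanism (the perceptron rule): when the prediction
            #    is wrong, nudge each weight toward the correct answer.
            error = label - predict(weights, bias, pixels)
            weights = [w + learning_rate * error * p for w, p in zip(weights, pixels)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
print([predict(weights, bias, pixels) for pixels, _ in TRAINING_DATA])  # [1, 1, 0, 0]

# A vertical bar in the right-hand column never appears in the training data.
right_column = [0, 0, 1,
                0, 0, 1,
                0, 0, 1]
print(predict(weights, bias, right_column))  # 0: misread as a horizontal bar
```

The neuron learns to label all four training images correctly, yet it misreads a vertical bar in a position it has never seen. That is a tiny version of the problem of training data that lacks important examples.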

To recognize objects in this photo, the neural network I used was trained on a data set with over 4 million objects identified by human reviewers. It is a specific type of neural network that takes into account the horizontal and vertical layout of the input data, just as our eyes do.

The photo shows one of my favorite places: Pier 7 in San Francisco. You can see that the captions correctly identify the light poles, the railing, and the sky. Even the Bay Bridge in the background is identified.
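A network that keeps track of the horizontal and vertical layout of its input is usually a convolutional neural network; I am assuming that is the type meant here. Its core operation slides a small grid of weights (a filter) across the image, so each output value depends only on a pixel and its neighbors above, below, and to either side. The sketch uses a hand-picked filter and a made-up 4x4 image; in a real network, the filter values are learned from the training data, and `conv2d` is an illustrative name, not a library function.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the feature map.

    Like most deep-learning libraries, this computes a cross-correlation:
    the kernel is not flipped before sliding.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Weighted sum of the pixel patch currently under the kernel.
            total = sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_h)
                for j in range(kernel_w)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x4 grayscale image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where brightness increases from left to
# right, i.e. at a vertical edge.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

print(conv2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The filter responds only in the middle column, where the dark and bright halves meet. A filter tuned to horizontal edges, such as `[[-1, -1], [1, 1]]`, returns all zeros on this image because nothing changes from top to bottom.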

Unfortunately, just like our own visual system, AI can make mistakes. They can come from the input, if we ignore important information: say, by using black-and-white photos instead of color photos. They might stem from the structure of the neural network itself; we still don’t know what the ideal structure is for many cases. We might update the structure incorrectly as the machine learns, and getting this right is especially challenging as the system becomes more complex. And finally, the data used to train the neural network might lack important examples, which can prevent it from accurately learning about all the objects we want it to recognize.

Each of these sources of error has a parallel in our own visual system, which makes mistakes in identifying objects for similar reasons. Color blindness, cataracts, or other vision problems can affect the input. A brain injury can cause irreparable damage to the normal structure of the brain, and neurological issues can cause an inability to learn or remember. And finally, a lifetime of limited experience can give us a skewed view of the world.
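The first of these sources of error, discarding important information at the input (black-and-white photos instead of color), is easy to demonstrate. This sketch converts colors to gray using the common ITU-R BT.601 luma weights (0.299, 0.587, 0.114); that formula is one of several in use and is an assumption here, as are the two example colors and the name `to_gray`. Roughly the situation of a red stone lying in green grass: two colors that are easy to tell apart become the same shade of gray.

```python
def to_gray(rgb):
    """Convert an (R, G, B) pixel with 0-255 channels to one 0-255 gray level.

    Uses the ITU-R BT.601 luma weights, one common choice among several.
    """
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


red_stone = (255, 0, 0)    # hypothetical saturated red
green_grass = (0, 130, 0)  # hypothetical mid green

print(red_stone == green_grass)                    # False: distinct in color
print(to_gray(red_stone) == to_gray(green_grass))  # True: identical in grayscale
```

Once the two pixels share a gray level, no later stage of the network can recover the difference; the information is gone before learning even begins.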

I wanted to understand how this sophisticated artificial intelligence perceived the human likeness. In this particular data set, there are over 450,000 instances of people, and the neural network architecture was designed specifically to mimic the human visual system. So this neural network, just like us, was biased towards seeing people.

So what would it see when I showed it the artifact that was so important to our ancestors, the Pebble of Many Faces?

Pareidolia Experienced by AI

When I showed it the Pebble of Many Faces, the AI was seeing the strangest things:

The nose of a giraffe

The ear of a horse

The bottom of the banana

A large brown apple

This is a tree

This is a bird

I was struck by the sheer number of ridiculous mistakes the AI made. Its errors were incomprehensible to the humans looking at it. A human would be extremely unlikely to make these same mistakes, and the AI was seeing a plethora of things that humans weren’t. It had its own pareidolia, one that we humans are unable to see.

Rethinking Bias in Machine Learning

Usually we think of bias in machine learning systems as an error to be avoided, a mistake. It might be innocuous like Siri mishearing us when we ask for the weather, or it could be serious and life changing, like unfairly denying parole to an individual who is unlikely to re-offend.

We consider these errors to be problems that better algorithms, more advanced technology, and larger data sets will fix. Most of the time we are right to think of them this way. Ignoring or downplaying the bias that creeps into the machine learning systems we build carries significant dangers: systematic inequality, lack of opportunity, and prejudice. Sometimes when we see ourselves reflected in what we build, we don’t like the result.

But thinking of AI bias only as a deficit implies that our own biases and flaws are only deficits, too. That doesn’t fit how we treat our own pareidolia: it is a bias in our cognitive system, yet we consider it full of meaning. Like the Man in the Moon, it is a commonality that connects us with one another. It has inspired stories that have shaped human culture for millennia.

I think that we should extend this graciousness to the AI that we are building. Instead of dismissing the errors that AI makes as problems to be overcome, we could respect them as meaningful: a window into another, artificial mind.

Our visual system evolved to be wired for what was important to our survival, for what mattered in our own history. Three million years ago, a rock that was recognized as the face of a friend or relative was so significant that an individual carried it for more than six hours.

And even though our ancestor couldn’t have known it, this pebble is still significant to us three million years later. Many consider this artifact to mark the roots of art.