Pareidolia

Last week I found that computer vision systems experience pareidolia, the phenomenon of seeing patterns where none exist, in a completely different way than humans do.

Through data analysis of a set of pareidolic images, I showed how different the underlying visual systems are.

Humans see patterns that AI does not, and vice versa.

I decided to explore this theme in more detail. Could a human trick a computer vision system? Another human's vision system? Would a human change the image in the same way as an AI to meet the same goal?

Ultimately, I would like to figure out what makes images pareidolic to humans. The gap between humans and AI underscores a major problem with current methods. If we could better understand how neural networks would have to work in order to be congruent with human vision, the technology would improve immensely.

Why does pareidolia matter?

Three million years ago, our hominid ancestors developed symbolic thought and began to appreciate pareidolic objects. This influenced early culture and belief systems. Social cues became increasingly important, so understanding faces, especially faces that looked like yours, was essential to survival.

The AI we're developing has not been created in the same way. We have no way of knowing the eventual repercussions of this difference, but autonomous vehicles recognizing pedestrians, facial recognition used to find criminals in security footage, and the detection of civilians in surveillance imagery are clearly important social and technological considerations.

(See my previous article for the full analysis.)

THE EXPERIMENT

For a first experiment, I decided to focus on handwritten digits. Recognizing writing is a crucial human skill, and the shapes of digits are more abstract than full photographs. The invention of writing is one of the most important events in human history.

Technologically, digit recognition has been an active area of research for many years. It has been tackled by almost every machine learning method out there, most recently convolutional neural networks, which have outperformed other methods such as Support Vector Machines, k-Nearest Neighbors, and many others.

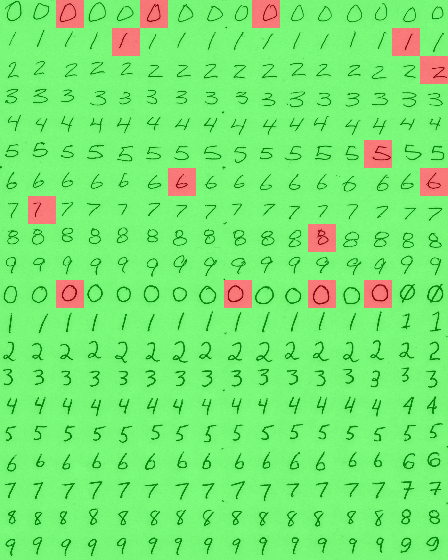

The original data

We created a dataset of digits drawn by two different people*. Each person drew 16 iterations of each digit between zero and nine for a total of 320 examples of digits. I then scanned them and digitized them for input into the neural network.
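The article doesn't describe how the scans were digitized. As a rough illustration only, the sketch below converts a grayscale scan into the format an MNIST-trained network expects: white ink on a black background, with the digit scaled to fit a 20x20 box inside a 28x28 frame. The function name `to_mnist_format`, the ink threshold, the nearest-neighbour resizing, and the bounding-box centering (MNIST itself centers digits by center of mass) are assumptions for illustration, not a record of the exact steps used in this experiment.

```python
import numpy as np

def to_mnist_format(scan: np.ndarray, ink_threshold: float = 0.2) -> np.ndarray:
    """Convert a grayscale scan (0 = black ink, 255 = white paper) to a 28x28 MNIST-style array.

    Hypothetical preprocessing: digit fit into a 20x20 box, placed in a 28x28 frame,
    centred by bounding box rather than by centre of mass.
    """
    # Invert so ink is bright (1.0) and paper is dark (0.0), as in MNIST.
    img = 1.0 - scan.astype(np.float64) / 255.0
    # Crop to the bounding box of pixels that contain ink.
    rows = np.where((img > ink_threshold).any(axis=1))[0]
    cols = np.where((img > ink_threshold).any(axis=0))[0]
    if rows.size == 0:
        return np.zeros((28, 28))  # blank scan: nothing to place
    img = img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
    # Pad the shorter side with background so the aspect ratio is preserved.
    h, w = img.shape
    side = max(h, w)
    square = np.zeros((side, side))
    top, left = (side - h) // 2, (side - w) // 2
    square[top:top + h, left:left + w] = img
    # Nearest-neighbour resize of the square crop to 20x20.
    idx = (np.arange(20) * side / 20).astype(int)
    small = square[np.ix_(idx, idx)]
    # Place the 20x20 digit in the middle of a 28x28 frame (4-pixel border).
    out = np.zeros((28, 28))
    out[4:24, 4:24] = small
    return out
```

A production pipeline would more likely use an image library's anti-aliased resize; plain NumPy keeps the sketch dependency-light.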

The adversarial data

Then we attempted to turn each digit into an 8. Each of us converted half of our own digits and half of the other person's. We chose 8 for two reasons: first, it’s one of the heaviest digits, so it would require drawing the most on our initial digits; and second, it’s back-to-school season, and we figured every student might like some advice about turning 50’s, 60’s, and 70’s into 80’s.

Our rules for making the 8's were to add as little ink as possible and to avoid tracing over the original digit. For instance, we didn't want to simply draw an 8 on top of the original digit.
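One hypothetical way to put a number on the "as little ink as possible" rule is to count pixels that are inked after the edit but not before, relative to the amount of ink in the original digit. This metric is not part of the experiment as described; the function name `added_ink_ratio` and its 0.5 threshold are illustrative assumptions. Note that on binarized images, tracing over an existing stroke adds no new pixels, so the second rule can't be measured this way.

```python
import numpy as np

def added_ink_ratio(original: np.ndarray, edited: np.ndarray, threshold: float = 0.5) -> float:
    """Pixels inked in `edited` but not in `original`, relative to the original ink.

    Both arrays are MNIST-style images: ink near 1.0, background near 0.0.
    """
    orig_ink = original > threshold
    # Only count ink that is new; pixels already inked in the original are ignored.
    new_ink = (edited > threshold) & ~orig_ink
    return float(new_ink.sum() / max(orig_ink.sum(), 1))
```

A ratio of 1.0 means the edit doubled the amount of ink on the page.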

Our first surprise was just how challenging adding adversarial markings was, especially in the case of the 0's and 2's. Try it yourself!

As with the original digits, I scanned and digitized them for input into our neural network.

The neural network

I used a convolutional neural network trained on the MNIST dataset of handwritten digits for the analysis.
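The article doesn't specify the network's architecture. To show what a convolutional network does with a 28x28 digit, here is a minimal NumPy forward pass for a hypothetical single-convolution network: eight 3x3 filters, a ReLU, 2x2 max pooling, then a dense layer with a softmax over the ten digit classes. The weights are random placeholders, so its predictions are meaningless until it is trained on MNIST's 60,000 training images; practical MNIST classifiers are usually deeper and built with a framework such as TensorFlow or PyTorch.

```python
import numpy as np

rng = np.random.default_rng(0)
# Placeholder weights for a hypothetical architecture; a trained model would learn these.
conv_w = rng.normal(0, 0.1, size=(8, 3, 3))            # 8 filters of size 3x3
dense_w = rng.normal(0, 0.1, size=(10, 8 * 13 * 13))   # 10 output classes
dense_b = np.zeros(10)

def conv2d(img, kernels):
    """Valid (no-padding) 2D cross-correlation of an image with each kernel."""
    k = kernels.shape[1]
    out_size = img.shape[0] - k + 1  # 26 for a 28x28 input and 3x3 kernels
    out = np.zeros((kernels.shape[0], out_size, out_size))
    for f, kern in enumerate(kernels):
        for i in range(out_size):
            for j in range(out_size):
                out[f, i, j] = np.sum(img[i:i + k, j:j + k] * kern)
    return out

def max_pool(x, size=2):
    """Non-overlapping max pooling over each feature map."""
    c, h, w = x.shape
    x = x[:, :h - h % size, :w - w % size]
    return x.reshape(c, h // size, size, w // size, size).max(axis=(2, 4))

def predict_proba(img):
    """Forward pass: conv -> ReLU -> max-pool -> flatten -> dense -> softmax."""
    feats = max_pool(np.maximum(conv2d(img, conv_w), 0))  # shape (8, 13, 13)
    logits = dense_w @ feats.ravel() + dense_b
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return exp / exp.sum()
```

The predicted digit is `predict_proba(img).argmax()`, where `img` is a 28x28 array in MNIST format.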

The original data

The adversarial data

RESULTS

Human Ratings

After we had turned all of our digits into 8's, we each rated the digits we created on a three-point scale:

Convincingly an 8

Looks more like an 8 than anything else

Does not look like an 8

The two rows of original 8's were left out of the rating, since they were already 8's.

We averaged our two individual scores to get the final score. In general, we felt that the 1-to-8, 3-to-8, and 7-to-8 transitions were the most convincing, and the 0-to-8, 4-to-8, and 9-to-8 transitions the least convincing. When we looked at the 2's, we found that each of us had a different style of 2. The looped 2 was much harder to turn into an 8 than the non-looped 2, so its results were less convincing.

Human ratings of our adversarial examples. The dark green represents the top score (most convincing as an 8) and dark red the lowest (least convincing) score.
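The averaging step can be sketched as follows. The article doesn't say how the three categories were converted to numbers; mapping them to 2, 1, and 0 is an assumption here, as are the names `SCALE`, `final_score`, and `mean_by_digit`.

```python
from statistics import mean

# Hypothetical numeric mapping for the three-point scale.
SCALE = {"convincing": 2, "more like an 8": 1, "not an 8": 0}

def final_score(rating_a: str, rating_b: str) -> float:
    """Average the two raters' scores for one adversarial example."""
    return (SCALE[rating_a] + SCALE[rating_b]) / 2

def mean_by_digit(examples):
    """examples: list of (original_digit, rating_a, rating_b) tuples."""
    by_digit = {}
    for digit, a, b in examples:
        by_digit.setdefault(digit, []).append(final_score(a, b))
    # One average per original digit, e.g. to colour a heatmap row.
    return {d: mean(scores) for d, scores in sorted(by_digit.items())}
```

For example, two 7-to-8's rated (convincing, convincing) and (convincing, more like an 8) give the digit 7 an average of 1.75 out of 2.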

We both agreed that 96 (33%) of our adversarial examples were convincingly an 8. In another 52 cases (18%), one of us was convinced while the other felt it looked more like an 8 than anything else but wasn't completely convinced. This means that just over half (51%) of our examples mostly tricked humans.
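The percentages in this section use 288 as the denominator: 320 digits drawn, minus the 32 original 8's that weren't rated. A quick check of the arithmetic:

```python
drawn = 2 * 10 * 16            # two people, ten digits, 16 copies each = 320
rated = drawn - 2 * 16         # the two rows of original 8's were excluded = 288
both_convinced = 96
one_convinced = 52             # the other rater chose "more like an 8 than anything else"

for label, n in [("both convinced", both_convinced),
                 ("one convinced", one_convinced),
                 ("combined", both_convinced + one_convinced)]:
    print(f"{label}: {n}/{rated} = {n / rated:.1%}")
```

This prints 33.3%, 18.1%, and 51.4%, matching the rounded figures above.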

Happily for students coming home with 70's who want to make them 80's, our results were fairly good: over three quarters (78%) of our 7-to-8's were convincing to at least one of our human judges. A total of 62% of the 6's were convincing to at least one of us, giving hope to students getting 60's.

Turning 1's and 3's into 8's was also fairly successful, so you might be able to get a small boost that way. (If you're coming home with 10's or 30's on a test, we suggest you direct your energy towards studying rather than trying to trick human vision systems.)

AI Ratings

But would our AI be so easily convinced by our handiwork? To find out, we fed the digits into the neural net to see whether it would be tricked by our adversarial examples.

How convincing our adversarial examples were to the neural network. Green means the AI classified it as an 8 while red means it did not.

We had about the same success tricking the AI as tricking ourselves. The AI saw 8's in our adversarial examples 91 times out of 288 (32% of the time), close to the 33% of examples that both of us found convincing.
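Counting how often the network was fooled amounts to taking the most probable class for each image and checking whether it is an 8. A minimal sketch, assuming the network's softmax outputs are collected into an array with one row per adversarial example; `fooled_rate` is a hypothetical helper, not code from the experiment.

```python
import numpy as np

def fooled_rate(probabilities: np.ndarray, target: int = 8) -> tuple[int, float]:
    """probabilities: (n_examples, 10) softmax outputs.

    Returns how many examples were classified as `target` and what share that is.
    """
    predictions = probabilities.argmax(axis=1)  # most probable digit per example
    hits = int((predictions == target).sum())
    return hits, hits / len(predictions)

print(f"{91 / 288:.0%}")  # the reported 91 of 288 prints as 32%
```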

Both humans and AI agreed that the 7-to-8's and the 1-to-8's were most convincing. But we diverged in our ratings for several others. While the AI found the 2-to-8's and 4-to-8's moderately convincing, we did not. We thought our 6-to-8's and 3-to-8's were convincing but our AI colleague did not agree.

AI Generated Adversarial Examples

What if you could ask your AI to fudge those grades for you? I decided to find out.

I got the neural network to do the same task we had done: turn each of our digits into an 8. Using the architecture of the neural network, we mathematically generated pixel-level dots of ink that would cause the prediction to be an 8. As the widely used term "adversarial noise" suggests, these dots just looked like noise on the image.
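The article doesn't name the method used to generate the noise. One common family of techniques uses the model's gradients: repeatedly nudge every pixel a small step in the direction that makes the target class more likely, an iterative, targeted variant of the fast gradient sign method. The sketch below applies that idea to a hypothetical stand-in model (softmax regression with random weights `W` and `b`) so the gradient can be written out by hand; `targeted_attack` and its step size are illustrative assumptions. Unlike our human rule of only adding ink, this version may also lighten pixels.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical stand-in model: softmax regression on flattened 28x28 images.
W = rng.normal(0, 0.1, size=(10, 784))
b = np.zeros(10)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def targeted_attack(x, target=8, step=0.01, max_steps=1000):
    """Iterative targeted gradient-sign attack: nudge pixels until the model predicts `target`."""
    x_adv = x.copy()
    onehot = np.eye(10)[target]
    for _ in range(max_steps):
        p = softmax(W @ x_adv + b)
        if p.argmax() == target:
            return x_adv, True
        # Gradient of the cross-entropy loss -log p[target] with respect to the input pixels.
        grad = W.T @ (p - onehot)
        # Step against the gradient's sign, keeping pixel intensities in [0, 1].
        x_adv = np.clip(x_adv - step * np.sign(grad), 0.0, 1.0)
    return x_adv, bool(softmax(W @ x_adv + b).argmax() == target)
```

Because every pixel moves a little at once, the change is spread thinly across the whole image, which is why this kind of perturbation tends to look like noise to a human rather than like a new stroke.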

It wasn’t perfect: some of the times it tried to create an 8 from our examples, it failed. Still, it succeeded on all but 15 examples, much better than our performance.

Needless to say, when we looked at the adversarial examples it generated, we found none of them convincing! Clearly, the AI was seeing something completely different from what we were observing in the image.

Don't get an AI to try to trick human observers, at least using this method!

Stitched together adversarial examples generated from our neural network.

Prediction of our computer generated adversarial examples

CONCLUSION

Just like we all learned in school, one delicate swoopy line is all that separates a C from a B (well, 78% of the time). But don't ask an AI to make that line for you, unless it's an AI you're trying to trick! Our AI-generated examples were not convincing to our human judges at all.

Of course we're not really recommending changing your grades if you're a student, but if you're a parent, now you know the tricks of the grade-changing trade.

But back to the AI. Although some overlap exists in the way AI sees the world and how humans do, there are also some pretty major differences. When humans tried to trick human and AI vision, we were moderately successful. AI is extremely good at tricking itself, but it does so in a completely different way than humans.

Given the neural network's performance on pareidolic images in last week's experiment, the results weren't all that surprising. The fact that AI doesn't recognize images that are pareidolic to humans, and sees pareidolic patterns that humans don't, matters for how we trust and use computer vision technology.

Thanks for reading.

Read more about my experiments with Pareidolia

*Special thanks to my significant other for helping me generate the dataset. When I asked “Hey, did you think you were going to be spending your Saturday creating adversarial examples to trick a neural net?” the reply I got was “Well, I do know you well enough that it really wasn’t completely outside the realm of possibility….” I think that says it all.